What happens when Google fires its ethics expert?

Tech giants like Google are not fully aware of the power of the artificial intelligence tools they’re building, say researchers in Switzerland who work in the sector. The recent high-profile firing of a Google ethics expert calls into question whether an ethical code for AI is really among Big Tech’s top priorities.

“The algorithms we work on are a matter of public interest, not personal affinities.” This is what El Mahdi El Mhamdi, the only Google Brain employee in Europe working with the ethical AI team, said on Twitter about the sudden dismissal of his manager Timnit Gebru in December 2020. Google Brain is a Google research team that works on deep learning, a technique loosely inspired by the way the human brain works.

Gebru is considered one of the most brilliant researchers in the field of artificial intelligence (AI) ethics. In an article written with other researchers, she warned about the ethical dangers of large language models, which form the basis of Google’s search engine and its business.

Born in Addis Ababa (Ethiopia) to Eritrean parents, Timnit Gebru arrived in the United States at the age of 15 as a refugee during the war between Ethiopia and Eritrea. She was later admitted to Stanford University and earned a doctorate in computer vision at the Stanford Artificial Intelligence Laboratory. Her dissertation on using large-scale public images to perform sociological analysis achieved some success, reaching the pages of the New York Times and the Economist. Wider recognition came when she co-published groundbreaking research showing that facial recognition systems are less accurate for women and people of colour, and discriminate against them. In 2018, Gebru was working for Microsoft Research when Google recruited her as co-leader of its new ethical AI team.

Gebru and her co-authors warned that large language models are trained on huge amounts of text from the internet, mostly from the Western world. This geographical bias, among others, carries the risk that racist, sexist and offensive language from the Web could end up in Google’s data and be reproduced by the system. Google reacted to the paper by asking Gebru to withdraw it. When she refused, the company fired her.

"Google’s short-sighted decision to fire and retaliate against a core member of the Ethical AI team makes it clear that we need swift and structural changes if this work is to continue…1\ https://t.co/ojaCydyS6f

— Timnit Gebru (@timnitGebru) December 17, 2020

Other researchers have also identified and highlighted the risks posed by the uncontrolled development of AI systems. Alexandros Kalousis, Professor of Data Mining and Machine Learning at the University of Applied Sciences of Western Switzerland, says there’s an “elephant in the room”: “AI is everywhere and is advancing at a fast pace; nevertheless, very often developers of AI tools and models are not really aware of how these will behave when they are deployed in complex real world settings”, he warns. “The realisation of harmful consequences comes after the fact, if ever.”

Wtf @Google @GoogleAI??

Before deploying any larger language models, are you confident that such a dangerous misinformation, probably ubiquitous in your training dataset, will not be repeated by your algorithms?

How does firing your ethics team help you make "responsible AIs"? https://t.co/9Fw8bOHonC

— Lê Nguyên Hoang (Science4All) (@le_science4all) February 28, 2021

But as Gebru’s experience showed, researchers who predict those consequences and raise red flags are not always embraced by the companies they work for.

“Timnit Gebru was hired by Google to deal with AI ethics and was fired because she dealt with AI ethics. This shows how much the company actually cares about ethical issues,” says Anna Jobin, a researcher at the Humboldt Institute of Internet and Society in Berlin and an expert in new technology ethics. “If they even treat a publicly known specialist in ethical AI this way, how can Google ever hope to become more ethical?”

If a company cannot even pretend to care about its internal "Ethical AI" co-leader, how much do you think they care about the rest of us?

In the wake of Google's outing of @timnitgebru, my two cents on #AIethics #BigTech #IStandWithTimnit: https://t.co/xzxlaNwPvC

— Dr. Anna Jobin (@annajobin) December 9, 2020

‘Ethics-washing’

Gebru was a symbol of a new generation of female and black leaders in the tech world, whose workforce is dominated by white men. Together with her colleague Margaret Mitchell, who was also dismissed from the company in February 2021, she had built a multicultural team to research and promote the ethical and inclusive development of Google’s AI systems.

After setting out its ethical AI principles in a guide, Google decided in 2019 to complete its internal governance structure by establishing the Advanced Technology External Advisory Council (ATEAC), an independent group to oversee the development of the company’s artificial intelligence systems.

“This group will consider some of Google’s most complex challenges that arise under our AI Principles, like facial recognition and fairness in machine learning, providing diverse perspectives to inform our work. We look forward to engaging with ATEAC members regarding these important issues,” Kent Walker, the company’s SVP of Global Affairs, wrote on Google’s blog.

Despite these good intentions, the group was shut down less than two weeks after its launch, following an uproar over the controversial appointment of two board members, one of whom was considered an ‘anti-trans, anti-LGBTQ and anti-immigrant’ conservative, and the resignation of another member.

But Jobin says that unless the issue is handled correctly and such teams are empowered to act on their findings, “‘Ethical AI’ can be an unethical force”: a purely cosmetic label that masks commercial interests which are in open conflict with moral principles. Just as people speak of “greenwashing”, where companies tout environmental policies but don’t act on them, one could speak of “ethics washing”, or ethical window dressing. “It would be to say: we are ethical, so stop bothering us,” explains Jobin.

Burak Emir, a senior staff software engineer who has worked for Google in Zurich for 13 years, began to question the purpose of the company’s ethics research after Gebru’s firing.

“Why do we have an ethics department if you can only write nice words?” the engineer wonders. “If the aim is to publish only nice, good-natured research, then let it not be said that it is done to increase knowledge. We need more transparency.”

It took me a while (sorry), but I read up on available info & came around to think that @timnitgebru's reaction makes sense. The demands formulated here are reasonable, and research leadership has to move to regain trust. https://t.co/2Wc3ImG0g7 #ISupportTimnit #BelieveBlackWomen

— Burak Emir (@burakemir) December 6, 2020

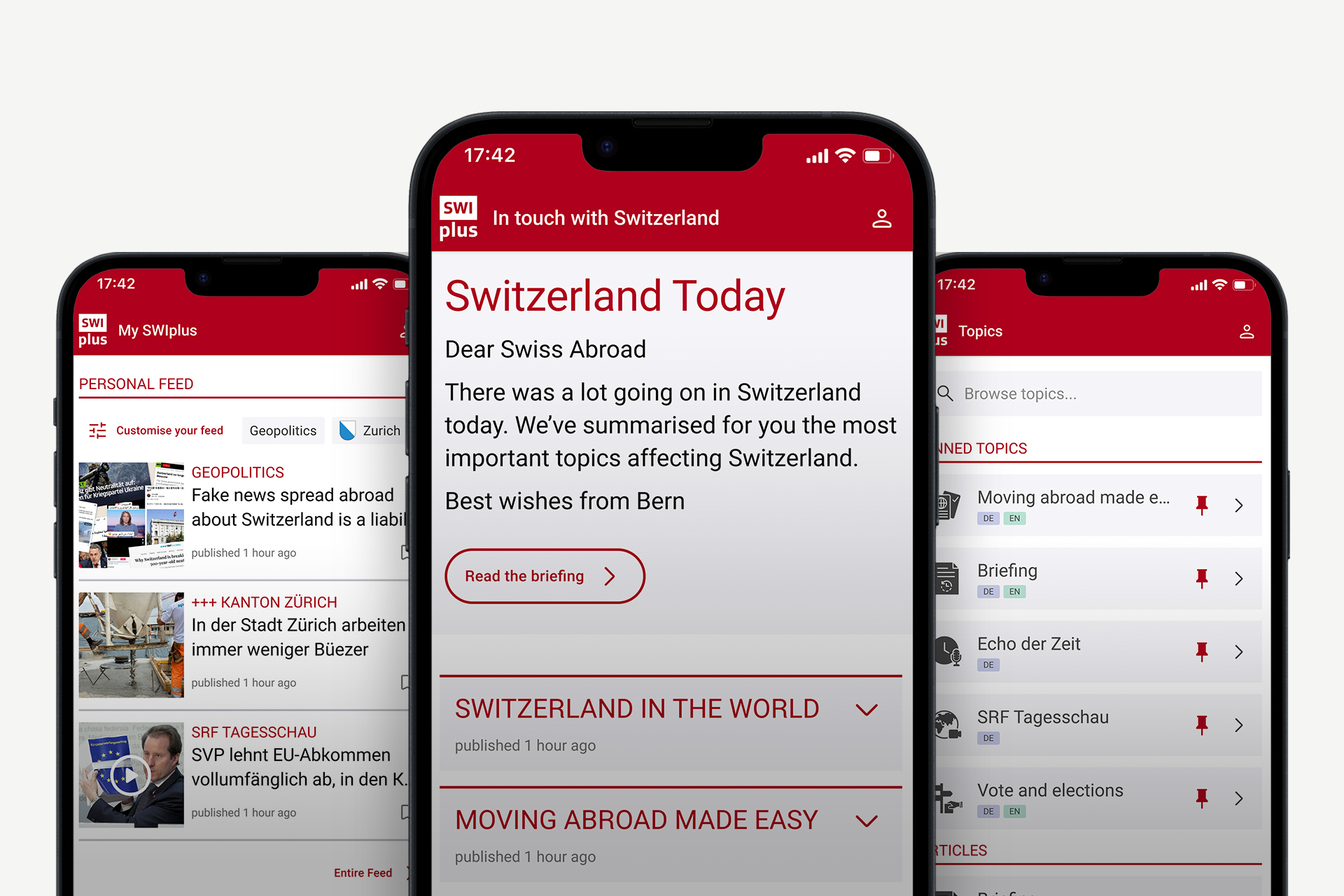

Many scientists and researchers have expressed their solidarity with Gebru, including some who live and work in Switzerland. Google’s largest research centre outside the United States is located in Zurich and focuses, among other things, on machine learning and artificial intelligence. Emir is one of more than 2,600 of the company’s employees who have signed a petition supporting Gebru and questioning the company’s approach to AI ethics.

The cowl does not make the monk

Google denies having ‘censored’ Gebru. When SWI swissinfo.ch reached out to Google Switzerland, its communications office referred us to official statements previously made by Google executives. In one of those statements, Jeff Dean, head of Google AI, said that the research co-signed by Gebru did not meet the bar for publication and that the company had not fired her but accepted her resignation, which Gebru says she never submitted.

I understand the concern over Timnit’s resignation from Google. She’s done a great deal to move the field forward with her research. I wanted to share the email I sent to Google Research and some thoughts on our research process. https://t.co/djUGdYwNMb

— Jeff Dean (@JeffDean) December 4, 2020

I was fired by @JeffDean for my email to Brain women and Allies. My corp account has been cutoff. So I've been immediately fired 🙂

— Timnit Gebru (@timnitGebru) December 3, 2020

Roberta Fischli, a researcher at the University of St. Gallen who also signed the petition supporting Gebru, says Google’s image has suffered within the ethics and AI community since the ethics researcher’s firing. Fischli thinks that conflict of interest is inevitable when doing research that questions existing practices within a company, so there was some risk involved from the beginning.

“In theory, most companies like having critical researchers. But in practice, it inevitably leads to a clash of interests if the researchers start criticising their employer,” she says. Fischli notes that researchers who seek to do groundbreaking work on AI ethics often don’t have much of a choice and end up in private companies because that’s where the most resources are concentrated. “So they try to understand certain mechanisms better and change things from the inside, which doesn’t always end well.”

The Web giant has continued to do business seemingly undisturbed since Gebru’s firing, but something is stirring. In January 2021, a group of the company’s US employees formed the Alphabet Workers Union, the first union in history within a large multinational technology company. Google employees around the world subsequently formed a global trade union alliance spanning 10 countries, including the UK and Switzerland.

Hard to understate the importance of this against the background of @timnitGebru’s forced departure from #Google’s AI Ethics team. Time to take ethics, diversity and labor law – and your own corporate slogan seriously, Google! #ISupportTimnit https://t.co/H0AORkHKE0

— Roberta Fischli (@leonieclaude) December 3, 2020

The elephant in the room

But the influence of Google and the so-called GAFA group (acronym for Google, Amazon, Facebook and Apple) extends far beyond the confines of the companies’ offices. What such tech giants are doing and planning also dictates the global academic research agenda. “It’s hard to find research in large academic and research institutions that isn’t linked to or even funded by big tech companies,” says Alexandros Kalousis of the University of Applied Sciences of Western Switzerland.

Given this influence, he finds it crucial to have independent and “out-of-the-box” voices highlighting the dangers of uncontrolled data exploitation by tech giants.

“This is the big problem in our society”, Kalousis says. “Discussions about ethics can sometimes be a distraction” from this pervasive control by Big Tech.

Can research be as independent as it needs to be to objectively question the risks of deploying AI technologies on a large scale? Not really, it seems. The problem goes beyond Google and concerns all the companies on the market that are deploying AI systems without rules or limits. For us, this means that technology is dictating what is ethically acceptable and what is not, and this is shaping our lives and mindsets.

In compliance with the JTI standards

More: SWI swissinfo.ch certified by the Journalism Trust Initiative
