Is artificial intelligence really as intelligent as we think?

Computers are increasingly taking important decisions for us. Should we be letting them? A team from the Swiss Idiap Research Institute shows why much of machine intelligence is just an illusion.

Can machines think? This is the opening question of British mathematician Alan Turing’s most famous paper. Published in 1950, it laid the foundation for the conception and definition of artificial intelligence (AI). To answer his question, Turing invented the “imitation game”, still used today to judge the intelligence of a machine.

The game, which later became known as the Turing Test, involves three players: player A is a man, player B is a woman and player C, who plays the role of the interrogator, can be of either gender. The interrogator cannot see the other two players and puts a series of written questions to them to determine which is the man and which is the woman. The man’s aim is to deceive the interrogator with misleading answers, while the woman tries to help the interrogator identify her correctly.

Now, imagine player A is replaced by a computer. Turing wrote that if the interrogator is not able to distinguish between a computer and a person, the computer should be considered an intelligent entity, since it would prove to be cognitively similar to a human being.

Seventy years later, the results of the test are astounding. “At the moment, there is not a single artificial intelligence system, and I mean not a single one, that has passed the first Turing Test,” says Hervé Bourlard, who directs Idiap, which specialises in artificial and cognitive intelligence.


Neither artificial nor intelligent

Having fallen out of favour as early as the 1970s, when it was considered outdated and even ridiculous, the term artificial intelligence made a comeback in the 1990s “for advertising, marketing and business reasons”, claims Bourlard, who is also a professor of electrical engineering. “But without any real progress other than in the power of mathematical models,” he adds.

He remains sceptical about the term artificial intelligence and how it is being used today. He says there is no such thing as “artificial intelligence”, because no system shows even the slightest trace of human intelligence. Even a two- or three-month-old baby can do things that an AI machine never will.

Take a glass of water on a table. An infant knows full well that if the glass is turned upside down, it becomes empty. “That’s why they enjoy tipping it over. No machine in the world can understand this difference,” Bourlard says.

What this example demonstrates also holds true for common sense, a distinctively human ability that machines cannot imitate and, according to Bourlard, never will.

The intelligence is in the data

AI is, however, well established in businesses across many sectors and is increasingly contributing to decision-making processes in areas such as human resources, insurance and bank lending, to name but a few.

By analysing human behaviour on the internet, machines are learning who we are and what we like. Recommendation engines then filter out less relevant information and recommend films to watch, news to read or clothes we might like on social media.

But that still doesn’t make artificial intelligence intelligent, says Bourlard, who took over at Idiap in 1996 and prefers to talk about machine learning. He says there are three things that make AI powerful in its own way: computing power, mathematical models, and vast and ubiquitous databases.

Increasingly powerful computers and the digitisation of information have made it possible to improve mathematical models enormously. The internet, with its seemingly endless databases, has done the rest, pushing the capabilities of AI systems further and further.

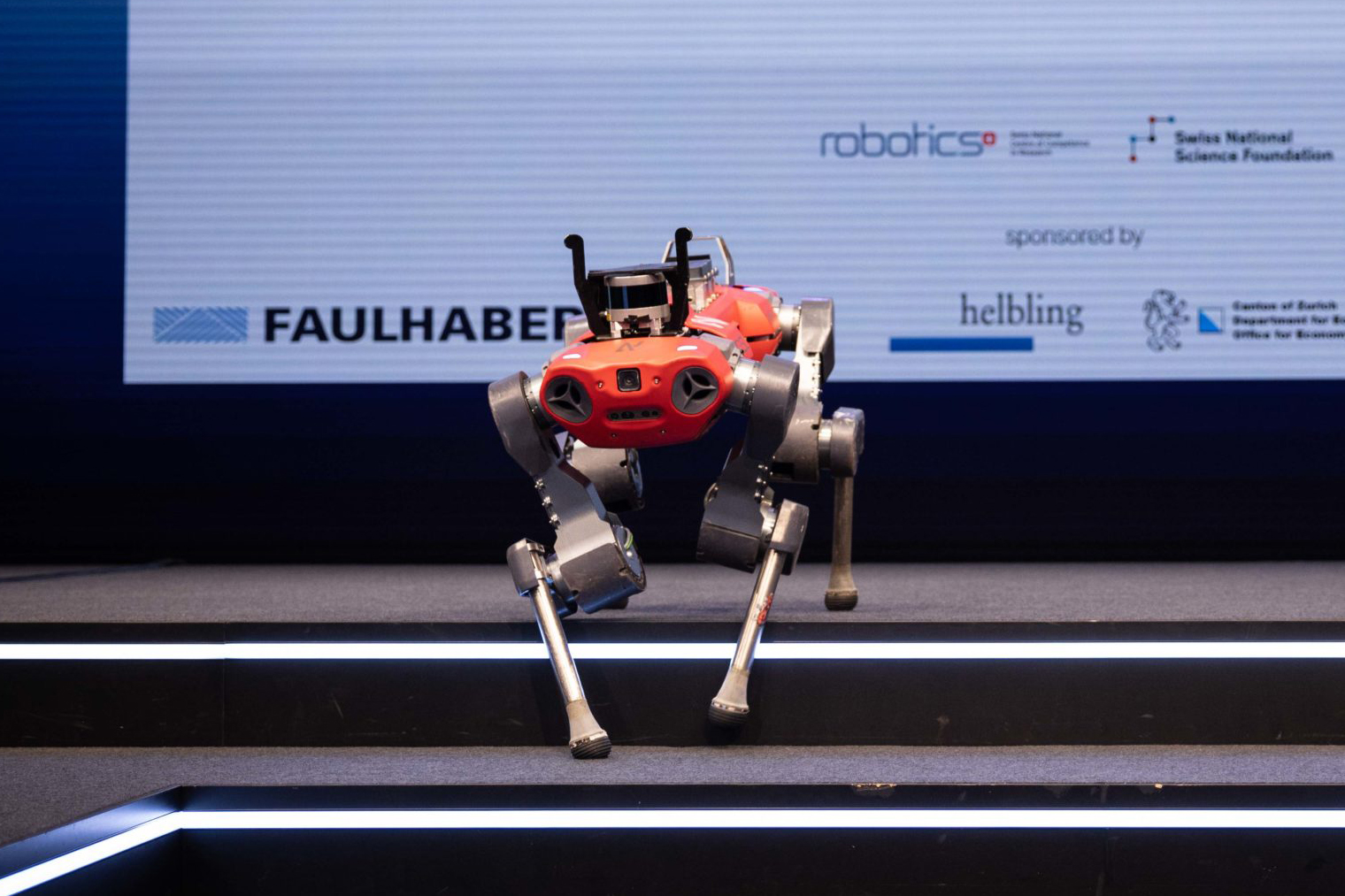

Watch how Idiap researchers show the public how AI works and what it can do:

Idiap is trying to show the public how central data is to AI systems through a number of demonstrations, which will be on display at the Museum of the Hand in Lausanne from April 1.

For example, the public will have the opportunity to see first-hand how the AI-based technology behind the cameras in our smartphones can significantly improve the quality of low-resolution images or, on the contrary, worsen it, depending on the data it has been trained with.

This is not an easy process: it requires a lot of high-quality data that must be annotated, or “labelled”, by a human being (even if not entirely by hand) to make it comprehensible to a machine.

“We’re not dealing with something that has a life of its own, but with a system that is fed data,” says Michael Liebling, who heads Idiap’s Computational Bioimaging Group.

This does not mean AI is perfectly safe. The limits of machines depend on the limits of data. This, according to Liebling, should make us think about where the real danger lies.

“Is the danger really in a sci-fi machine taking over the world? Or is it in the way we distribute and annotate data? Personally, I think the threat lies in the way the data is managed rather than in the machines themselves,” he says.


More transparency needed

Tech giants such as Google and Facebook are well aware of the power of models fed massive amounts of data and have built their businesses around it. This, along with the automation of some human tasks, is what most worries the scientific community.

Former Google researcher Timnit Gebru says she was even fired for criticising the very large and inscrutable language models underlying the world’s most widely used search engine.


The limitation of machine-learning models is that they do not (or at least not yet) show a reasoning ability equal to ours. They are able to provide answers but cannot explain how they reached their conclusions.

“You also have to make them transparent and understandable for a human audience,” says André Freitas, who heads the Reasoning & Explainable AI research group at Idiap.

The good news, he says, is that the AI community, which in the past focused primarily on improving the performance and accuracy of models, is now also pushing to develop ethical, explainable, safe and fair models.

Freitas’s research group is building AI models that can explain their inferences. For example, their model would not only predict and recommend when Covid-19-positive patients should be admitted to intensive care units, but also explain to the medical staff using it how it reached these conclusions and what its possible limitations are.

“By building AI models that can explain themselves to the external world, we can give users the tools to have a critical view of the model’s limitations and strengths,” Freitas says. The aim is to turn complex algorithms and technical jargon into something understandable and accessible. As applications of AI start to permeate our lives, his advice is: “if you come across an AI system, challenge it to explain itself”.

Illusions of intelligence

AI is often described as the driver behind today’s technologies. So, naturally, it arouses excitement and high expectations. Computers using neural network models, which are inspired by the human brain, are performing well in areas where such results were previously unthinkable.

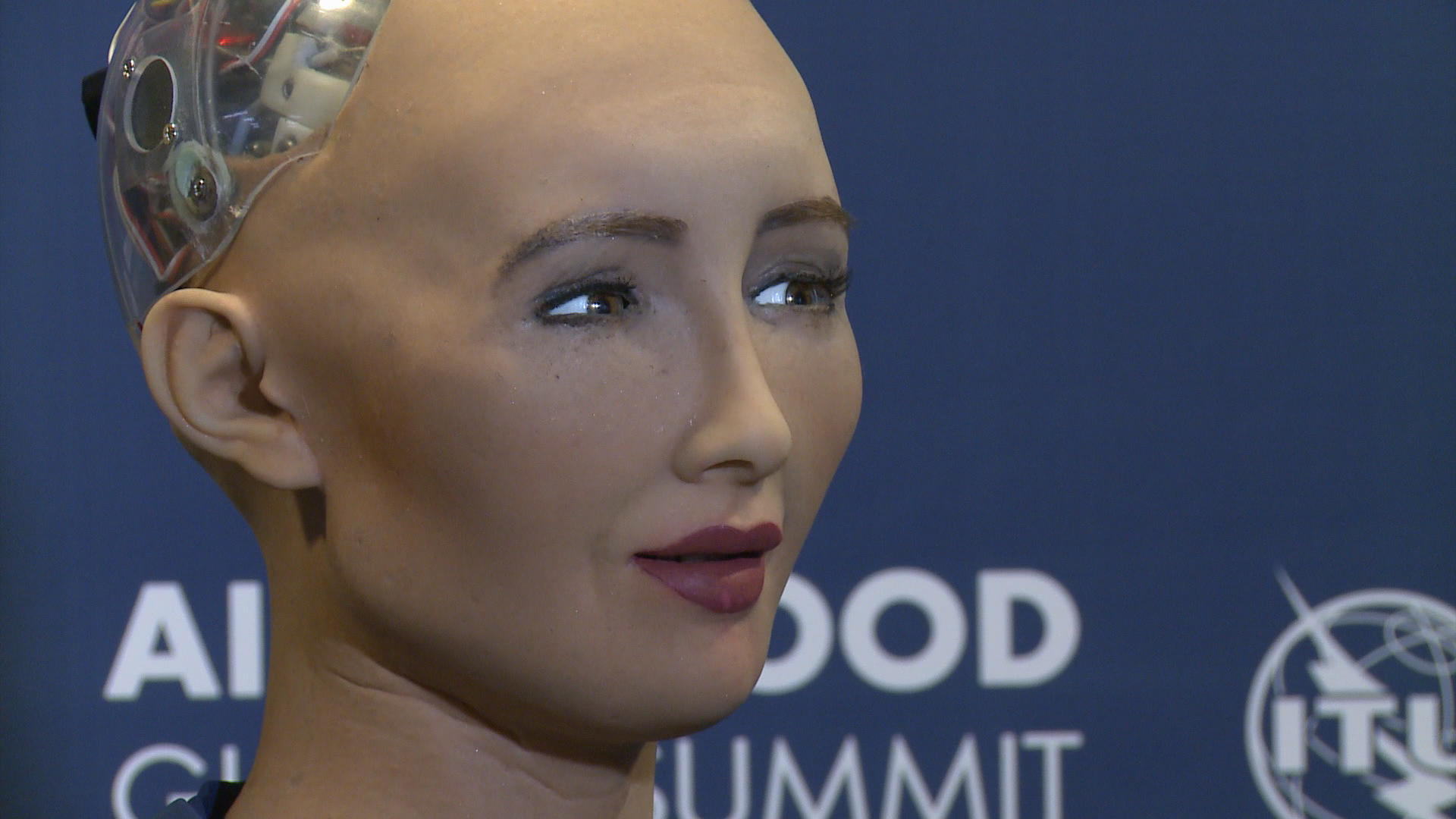

“This has made us believe that AI will become as intelligent as we are and solve all our problems,” says Lonneke van der Plas, who leads the Computation, Cognition and Language group at Idiap.

She gives the example of the increasingly advanced capabilities of language tools, such as virtual assistants or automatic translation tools. “They leave us speechless, and we think that if a computer can engage in something as complex as language, there must be intelligence behind it.”

Language tools can imitate us, because the underlying models are able to learn patterns from a large volume of texts. But compare a (voice-activated) virtual assistant with an average child talking about, say, a paper plane: the tool needs far more data to reach the child’s level, and it will still struggle to learn everyday knowledge such as common sense.

“It’s easy to deceive ourselves, because sounding like a human being does not automatically mean there is human intelligence behind it,” Van der Plas says.

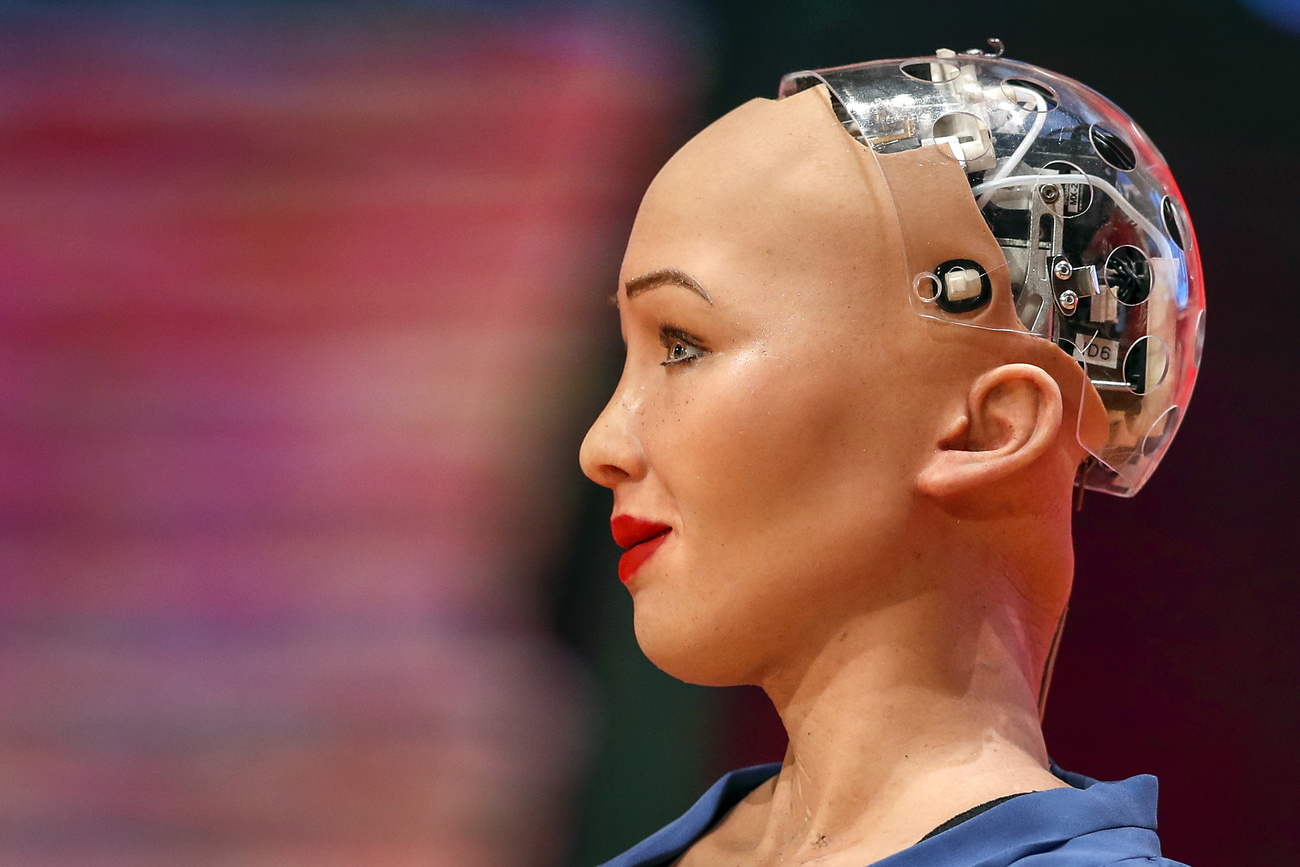

After all, as Alan Turing wrote 70 years ago, there is little point in trying to make a “thinking machine” seem more human through aesthetic embellishments. You can’t judge a book by its cover.


SWI swissinfo.ch is certified by the Journalism Trust Initiative (JTI).


If you want to start a conversation about a topic raised in this article or want to report factual errors, email us at english@swissinfo.ch.