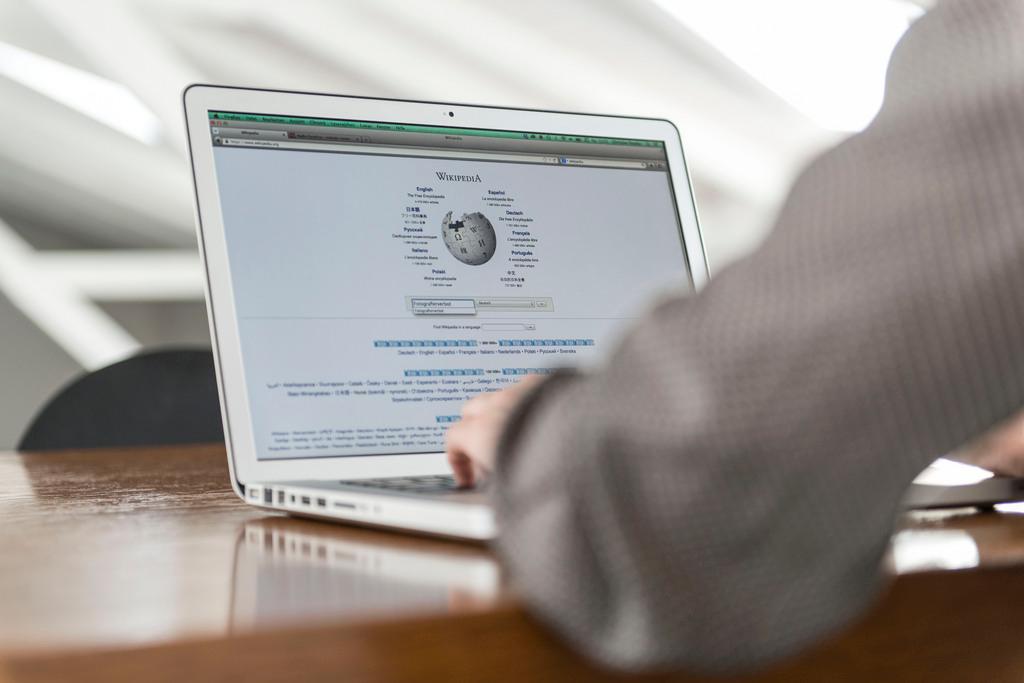

Switzerland-based research fills Wikipedia’s language gaps

A professor at Lausanne’s Federal Institute of Technology (EPFL) has helped develop a platform to identify and generate key Wikipedia pages that are currently missing in minority languages.

The new tool combines machine learning with human expertise to make the crowdsourced online information platform available to more people.

If you speak English, chances are you can rely on Wikipedia to provide information when you want to do some light research, check a fact, or prove a point. But despite the online encyclopaedia’s 40 million pages in nearly 300 languages, significant gaps in coverage exist for less-spoken tongues – like Switzerland’s Romansh – which can lack basic or essential entries like “climate change” or “the universe”.

For Wikipedia editors, it can be difficult to know how to prioritise the translation of pages into different languages based on the relative cultural importance of each topic.

Robert West, head of the EPFL’s Data Science Lab, decided to fix that using science. He and his colleagues have developed a machine learning algorithm that scans Wikipedia to find the topics missing in each language and rank them by importance.

The technology could be useful for Swiss Wikipedia users, especially since only 3,400 articles are available in Romansh compared to roughly two million each in French and German.

Computer analytics plus human knowledge

To create an objective “importance” ranking for missing Wikipedia articles, the algorithm predicts how many visits a page would theoretically receive in a given language, based on visits to articles in other languages.

“To avoid ethnocentric biases, we predicted page statistics by taking all languages into account, and the machine learning algorithms then figured out the weighting to apply to each language,” West explained in an EPFL press statement. As an example, he said that it’s more important to take Japanese Wikipedia use into account than English when predicting the impact of a particular page in Chinese.
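The article does not say which model the researchers use. As a minimal sketch, assuming a linear model on log-scaled page views fitted by ordinary least squares, the per-language weighting West describes could be learned from articles that already exist in the target language, then applied to articles that are still missing there. The function names `fit_language_weights` and `predict_views` are hypothetical and not part of GapFinder.

```python
import math
from typing import Dict, List, Tuple


def fit_language_weights(rows: List[Dict[str, float]], target: str,
                         sources: List[str]) -> Tuple[float, Dict[str, float]]:
    """Least-squares fit of log1p(views[target]) ~ b + sum(w[l] * log1p(views[l])).

    rows: one dict per training article (language code -> page views); every
    article must already exist in `target` and in all `sources`.
    Returns the intercept b and one learned weight per source language.
    """
    # Design matrix with a leading 1 for the intercept, plus the target vector.
    X = [[1.0] + [math.log1p(r[lang]) for lang in sources] for r in rows]
    y = [math.log1p(r[target]) for r in rows]
    n = len(sources) + 1
    # Normal equations (X^T X) w = X^T y, stored as an augmented n x (n+1) matrix.
    A = [[sum(x[i] * x[j] for x in X) for j in range(n)]
         + [sum(x[i] * t for x, t in zip(X, y))]
         for i in range(n)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        if abs(A[col][col]) < 1e-12:
            raise ValueError("singular system: add more, or more varied, articles")
        for r in range(n):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    w = [A[i][n] / A[i][i] for i in range(n)]
    return w[0], dict(zip(sources, w[1:]))


def predict_views(intercept: float, weights: Dict[str, float],
                  views: Dict[str, float]) -> float:
    """Predicted target-language views for one article; absent languages count as 0."""
    z = intercept + sum(w * math.log1p(views.get(lang, 0)) for lang, w in weights.items())
    return math.expm1(z)
```

With Chinese (`"zh"`) as the target and Japanese (`"ja"`) and English (`"en"`) as sources, a larger fitted weight for `"ja"` than for `"en"` would correspond to West's example. Working on log-scaled views keeps a handful of very popular pages from dominating the fit.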

The neutral rankings generated by the algorithm are then posted as recommendations for volunteer editors on a platform called Wikipedia GapFinder, which was developed in collaboration with researchers from Stanford University and the Wikimedia Foundation.
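Once each missing article has a predicted-views score, producing a ranked recommendation list is essentially a filter and a sort. The sketch below assumes articles are keyed by a cross-language identifier (here simply a title) and that `predict` is any scoring function, for instance one built from learned language weights; `rank_missing` is a hypothetical name, not GapFinder's actual interface.

```python
from typing import Callable, Dict, List, Tuple

Views = Dict[str, float]  # language code -> page views for one article


def rank_missing(articles: Dict[str, Views], target: str,
                 predict: Callable[[Views], float],
                 top_k: int = 10) -> List[Tuple[str, float]]:
    """Return up to top_k articles absent from `target`, highest predicted views first."""
    # Keep only articles with no version in the target language, and score them.
    missing = [(title, predict(views)) for title, views in articles.items()
               if target not in views]
    # Most important (highest predicted views) first.
    missing.sort(key=lambda item: item[1], reverse=True)
    return missing[:top_k]
```

Passing the scorer in as a function keeps the ranking step independent of whichever prediction model produces the scores.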

Based on their language skills and interests, these volunteer editors can then generate the missing pages themselves, with the help of an online translation tool.
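The article does not describe how recommendations are matched to individual editors. One plausible filter, assuming each editor declares the languages they can read, the one language they write in, and optional topic interests, is sketched below; `Editor`, `recommendations_for` and the topic tags are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Editor:
    reads: Set[str]   # languages the editor can translate from
    writes: str       # language the editor contributes to
    interests: Set[str] = field(default_factory=set)  # topic tags; empty means "any"


def recommendations_for(editor: Editor, ranked: List[str],
                        available: Dict[str, Set[str]],
                        topics: Dict[str, Set[str]]) -> List[str]:
    """Filter a ranked list of article titles down to ones this editor could write."""
    picks = []
    for title in ranked:
        langs = available.get(title, set())
        if editor.writes in langs:
            continue  # already exists in the editor's language
        if not (editor.reads & langs):
            continue  # no source version the editor can read and translate
        if editor.interests and not (editor.interests & topics.get(title, set())):
            continue  # outside the editor's declared interests
        picks.append(title)  # preserves the importance order of `ranked`
    return picks
```

Requiring a readable source version reflects the translation-based workflow the article describes, where editors start from an existing page in another language.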

“Human intervention is still required to meet Wikipedia’s publication standards, since machine translation is not yet up to scratch,” West added.

Since its beta launch about a year ago, the Wikipedia GapFinder platform has led to the creation of about 10,000 new Wikipedia articles. West is now working on a follow-up project aimed at adding more detailed information to existing articles.

This article complies with the Journalism Trust Initiative (JTI) standards. SWI swissinfo.ch is certified by the Journalism Trust Initiative.


If you want to start a conversation about a topic raised in this article or want to report factual errors, email us at english@swissinfo.ch.